In web applications it’s not rare to face performance issues that we can’t quite understand. Especially when working with databases, we treat them as this huge “black box” that 99% of the times works amazingly without us even caring about it. Heck, we even use stuff like ORMs that essentially “hide” our interaction with the database, making us think that we don’t need to care about this stuff.

If you’re developing something small, contained, simple then this is probably the case. Your database will most likely perform OK no matter how poorly designed or configured it might be. You won’t have any issues using “naive” queries built by ORMs, everything will work just fine.

However, as your application grows, then the database is something that can make or brake you. It’s one of the hardest things to scale (you can’t just spin up multiple instances), it’s hard to re-design or migrate and essentially it’s the core of your application, serving and storing all your data. Hence its performance is critical.

In this post I’ll showcase a real-life example of debugging a seemingly weird database performance degradation. While I obviously intend to share the solution and what to avoid, I’d also like to take you through the journey and show you some tools & processes that can help you dig into SQL performance.

Let’s go!

The system & the problem

The database we’ll be studying is an AWS Aurora RDS (PostgreSQL 12). It is a clustered database and has two replicas, a reader (read-only replica) and a writer. AWS Aurora is pretty close to an actual PostgreSQL with some zero-lag replication capabilities on top (and some managed features of course). The whole process discussed here should apply to a self-managed RDS PostgreSQL as well.

The problem we will be studying is the (seemingly) random poor performance of UPDATE / INSERT statements. This

was observed in a specific table, that had ~20000000 rows and 23 indexes.

So while, most writes (>99.99%) take <10ms to complete, some statements were taking more than 40 seconds. Some even

ended up being killed by the statement_timeout setting (which was set at 100s!). It was baffling to say the least.

Assumptions

Since this wasn’t consistently reproducible, several assumptions were made:

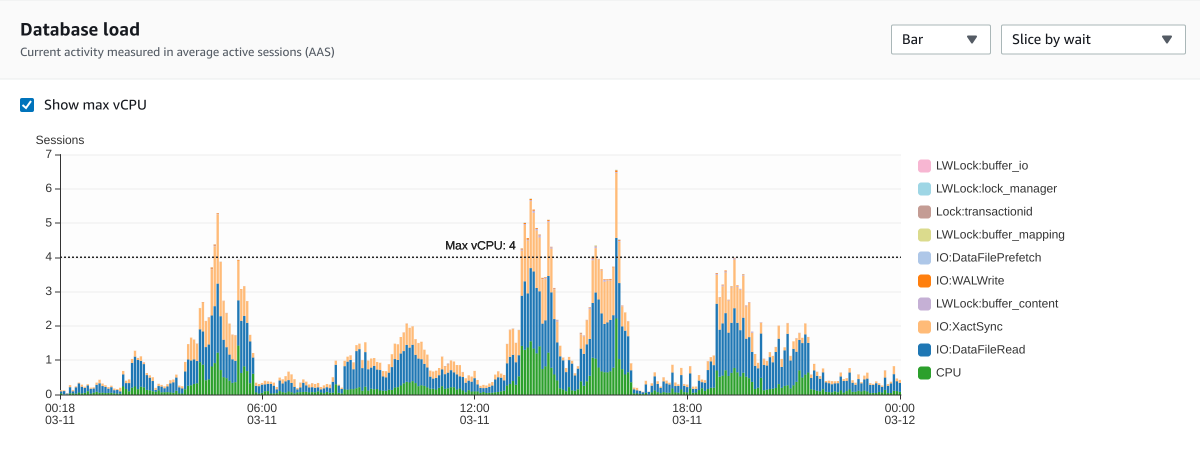

Too much write volume

The first assumption was that there was just too much write volume on the database. While this is partially true, our evidence didn’t support that this could be the root cause of the problem since it occured uniformly both in periods with high write volume but also in periods where write volume was minimal.

Slow writes occured both in idle times (06:00 - 08:00) and in high load intervals (13:00 - 15:00) with similar frequency

Batch writes

Quite often the application was writing rows in batches. This was also considered a possible cause and batched writes were removed. However, the problem remained (and the performance overall was worse when writing one row at a time).

Too many indexes

This table had numerous access patterns, and hence it required numerous indexes to perform well. It had 23 B-Tree indexes, 6 Foreign-key constraints, 3 BRIN indexes and 1 GIN index (for full text search). While it is clear that indexes play a role in write performance (since for every write you need update every index), but this didn’t explain why most updates were really fast and some excruciatigly slow.

Locks

The last assumption was that there maybe were competing locks in the database. Specifically, maybe some long running processes opened big transactions and locked resources for a long time. Then, other writes were waiting to update the locked rows and couldn’t finish. This seemed like a good assumption and it couldn’t be disprooved with the data at hand. So it was time for further investigation

Validating

To help us check our assumptions, PostgreSQL offers some tools. Those can be enabled via its configuration (postgresql.conf or the

RDS parameter groups in AWS). Some interesting options are:

log_lock_waits: Enabling this will instruct the Deadlock Detector to log whenever a statement exceedsdeadlock_timeout. There is no performance overhead enabling this, since the Deadlock Detector should be running anyway, and if it is, then it’s practically free (source)log_min_duration: This will log queries running for more than X msauto_explain: This is actually a number of configurations (auto_explain.log_min_duration,auto_explain.log_analyzeetc). They control when and how PostgreSQL will automatically perform anEXPLAINon running queries. Those are useful as a precaution too, to make sure that poorly performing statements will leave traces & query plans for you to debug. You can read more herelog_statement: This is pretty useful. It can enable logging all / most / error statements etc. If you want to find out if something’s wrong with your database, it’s a common practice to set this toallfor some time, gather the output and analyze it with a tool like pgBadger. You can see all the logging-related options here

So, I went to enable those, reproduce the issue and see what the heck is going on.

What is this EXPLAIN you keep mentioning?

In short, EXPLAIN will show us the execution plan the PostgreSQL query planner choses when running a query.

It will show which strateges are used, when it JOINs, which indexes are used and some metrics about costs etc. If you are

not familiar with the EXPLAIN statement, I will be using it a bit below so you can take a quick read at this to get a basic understanding.

Reproducing the issue

However, while testing something irrelevant on our staging instance, I managed to reproduce the issue predictably. To do that, all I had to do was to update 5000 rows on this table. While doing that, once or twice every 5000 updates, an update would take > 40sec!

This was a true blessing, because it ruled out both the “too much write volume” hypothesis (our staging DB had zero traffic) and the long locks as well, since there were no processes locking the rows I was updating.

I performed an EXPLAIN ANALYZE on the problematic query to see what is going on

The query

EXPLAIN (ANALYZE VERBOSE BUFFERS COSTS) UPDATE "my_table"

SET "match_request_id" = 'c607789f-4816-4a38-844b-173fa7bf64ed'::uuid

WHERE "my_table"."id" = 130561719;

The query plan for the fast execution

Update on public.my_table (cost=0.43..8.45 rows=1 width=832) (actual time=2.037..2.037 rows=0 loops=1)

Buffers: shared hit=152 read=1

I/O Timings: read=1.22

-> Index Scan using my_table_pkey on public.my_table (cost=0.43..8.45 rows=1 width=837) (actual time=0.024..0.026 rows=1 loops=1)

Output: (...)

Index Cond: (my_table.id = 130561719)

Buffers: shared hit=4

Planning Time: 1.170 ms

Execution Time: 2.133 ms

and the one for the extremely slow runs

Update on public.my_table (cost=0.56..8.58 rows=1 width=832) (actual time=34106.965..34106.966 rows=0 loops=1)

Buffers: shared hit=431280 read=27724 <----- THIS IS HUGE!!

I/O Timings: read=32469.021

-> Index Scan using my_table_pkey on public.my_table (cost=0.56..8.58 rows=1 width=832) (actual time=0.100..0.105 rows=1 loops=1)

Output: (...)

Index Cond: (my_table.id = 130561719)

Buffers: shared hit=7

Planning Time: 23.872 ms

Execution Time: 34107.047 ms

We could easily note the following:

- the predicted cost was the same in both cases (although running time clearly wasn’t)

- the 2nd case ended up reading ~450k buffers!

The last one was a clear indicator that there was an issue. But I couldn’t figure out what was causing this. I started doing various experiments, hoping to mitigate it. I tried:

- Doing a

VACCUM FULL(ref) hoping that maybe this happened becauseAUTOVACUUMdidn’t function well. Sadly, no result. - Doing an

ANALYZEon the table to force PostgreSQL to update its stats and maybe execute the query more efficiently. Again, no luck. - Dropping and recreating the involved index. This didn’t work either.

At this point I was pretty much out of ideas.

Getting answers

Before giving up, I decided to reach out to the masters. I wrote the following post on the DBA StackExchange, which is a targeted community for Database Administrators and developers working with databases.

What amazed me was that even before I explained the specifics of my case, people were asking in the comments if my table had a GIN index.

Actually, they were pretty sure that it had one. Moreover, they suggested that I had something called fastupdate enabled.

That led me to the documentation (once again). Let’s quote a bit from it:

GIN Fast Update Technique

Updating a GIN index tends to be slow because of the intrinsic nature of inverted indexes: inserting or updating one heap row can cause many inserts into the index (one for each key extracted from the indexed item). As of PostgreSQL 8.4, GIN is capable of postponing much of this work by inserting new tuples into a temporary, unsorted list of pending entries.

This described our case 100%. Most writes, since they didn’t trigger a cleanup of the pending list, were blazing fast. However, when the

pending list cleanup was triggered (its size grew more than gin_pending_list_limit) the process that performed the write blocked until

the pending list was cleaned up and the index was synchronized.

The action that triggered a cleanup blocked until the contents of the list were persisted to the index. Note that SELECTs also have to go through the pending list contents, which are unsorted so there may be a performance overhead there as well

Experimentation

I went on to check if my index had fastupdate set. This is an option in the index storage parameters. To check that, you can use

\d+ <index_name> in psql. I didn’t see anything there, but reading up on the CREATE INDEX command

I noticed that fastupdate was ON by default. I switched it off to do some tests:

ALTER INDEX <index_name> SET (fastupdate=off);

Changing index storage parameters

Be careful when running statements like the one above. For one, this will trigger a lock until the index storage parameters are changed.

Moreover, disabling fastupdate means that you will manually have to cleanup the pending list too (using SELECT gin_clean_pending_list()) or

rebuild the index (using REINDEX). Both cases will probably cause performance or integrity issues in a production system, so be careful.

Voila! The problem was gone. Every write took the same, predictable time. However, as expected, it was noticably slower. So I was reluctant

to consider disabling fastupdate altogther.

Possible solutions

At this point, a complete solution had been submitted in my StackExchange post and I saw some other more viable options:

- I could run

VACUUMmore aggressively, hoping that it will cleanup the pending list on the background and my queries will never trigger a cleanup. However, I don’t think this would be 100% reliable again. - I could set an even higher

gin_pending_list_limit(default: 4MB). This would mean that cleanups would be really rare but it could impactSELECTstatements (they have to read the pending list too) and if a cleanup occured it would take huge amounts of time. - I could set a background process to perform a

SELECT gin_clean_pending_list()periodically. However, much like option 1 this would not guarantee anything - I could set a smaller

gin_pending_list_limitso that cleanups are more often but take less time.

I decided to go with the last, and ran some experiments to see how this would impact the system. Out of curiosity, I even dropped the index to see how much it affected write performance. You can see some results below:

| no GIN index | GIN index (without fastupdate) | GIN index (with fastupdate & threshold 4MB) | GIN index (with fastupdate & threshold 128KB) | |

|---|---|---|---|---|

| 1 update: best case | < 1 ms | 50 ms | < 1 ms | < 1 ms |

| 1 update: worst (observed) case | 3m 10s (with gin_pending_list flush) | 4 s (with gin_pending_list flush) | ||

| 5000 updates (non-batched) | 2min | 6m 30s | 7m | 7m |

| 5000 updates (batched, 20) | 1min | 7m | 7m | 7m |

Some interesting insights:

- Average time of inserting 5000 rows is the same without

fastupdateand with any size ofgin_pending_list_limit, which is expected. - Updates that don’t trigger a cleanup take the same time, no matter how big or small

gin_pending_list_limitis (again, expected). - With a value of 128KB, updates that triggered a cleanup took 4sec, which was very tolerable

- When the index was dropped, we saw a huge performance boost (3x faster with non-batched updates and >6x faster with batched!)

Solving the issue

By experimentation, 128KB seemed like a good value. So I chose to proceed this way.

Now, there were various ways to set the gin_pending_list_limit:

- Via

postgresql.conf(or DB parameter groups in AWS RDS). This affects all GIN indexes. In AWS RDS it doesn’t require a restart (it’s a dynamic parameter). However, if you’re running a self-managed PostgreSQL you’ll most likely need to restart for the changes inpostgresql.confto take effect - By altering the index storage parameters (

ALTER INDEX <index_name> SET (gin_pending_list_limit=128)). But this could cause a number of issues (see the note above) - By altering the

gin_pending_list_limitfor the specific user (ALTER USER <user_name> SET gin_pending_list_limit=128). This would affect all new connections and wouldn’t require a restart.

Personally I’d choose the first one. In this case I had to go with the latter because of some unrelated issues. But they all would do the trick.

The next day

After monitoring for 1 week, there were no random failing writes which was an amazing relief since the issue had been there forever. The whole process took about a week and apart from gaining knowledge on GIN index internals, it also provided some insight on how much a GIN index can affect write times and triggered a discussion for reconsidering full text search in PostgreSQL.