This is the first in a series of posts

While building my home lab, I came across a lot of posts from people who had built similar things. However, I always felt that I was missing either the context (why do this thing in this particular way) or the details, implementation or otherwise. By splitting this into different posts, I hope to provide the full context of each decision I make, along with a comprehensive & complete guide so that you can do the same.

This first post covers the reasoning behind it all and why I’m doing it in that particular way, so it’s a bit high-level and introductory. The next ones will be more technical and closer to actual tutorials.

I will be updating this post with links to other related posts as they go live.

Illustrations were created by Copilot Designer (DALL·E 3). Expect AI randomness!

Introduction

Ever since I started working in tech, I’ve been fascinated by automation and standardization. I’ve always admired efficient CI / CD pipelines, scalable & configurable infrastructure that can be provisioned “at the touch of a button” and having repeatable setups to host your services on.

When I started out, it wasn’t uncommon for people to deploy their services on bare metal servers that were provisioned once and then lived forever. These servers were difficult to maintain, monitor, upgrade etc and a number of solutions were developed by system administrators to work with them. It all though felt very cumbersome, at least from my (undoubtedly limited, I’ll give you that) developer’s point of view. I always felt that there would eventually be a better way.

Over the years, many people would manage /deploy their apps with technologies like Ansible, or develop sets of scripts to provision devices & servers for specific use cases. Some would use (or build) images that came with the dependencies of their apps preinstalled and host their apps off of that. These solutions all worked well, but then came Docker and shortly after one of the best ways to run containers in production, Kubernetes.

This felt like a big change. Development was streamlined, you could spin up a bunch of (micro)services locally and not break your development machine. You could let them interact (almost) as they would in production with little to no overhead. Productivity skyrocketed and the days of “breaking your local env” because you upgraded a package or missed a line of config were pretty much gone (again, not 100%, but definitely significantly reduced).

Docker solved packaging your app.

But then what about production? How would your local env compare to what was running on live? There were a bunch of different “cloud app” platforms, most of which worked really well. From Heroku, one of the first ones (that git push deploy was amazing) to more docker-aware solutions (like AWS ECS). But usually they either made a lot of abstractions / assumptions that would force your application’s architecture, or they were too expensive to run them in a higher scale. After a while, a lot of organizations turned to the more “hands on” solution, Kubernetes (k8s for short).

From the official website:

Kubernetes is a portable, extensible, open source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation.

In short, Kubernetes provides a way to take your containerized application and run it in the cloud. It’s really flexible, highly configurable, and is built from the ground up with scalability in mind. It provides ways to deploy your app, load balance it, and scale it horizontally out of the box. It lets you describe the most complex workflows and deploy them gracefully. It lets you have multiple teams working on it and isolate everyone’s permissions, both at a user and at an application level. And that doesn’t even scratch the surface of what it can do.

But, going back to my love of automation and standardization, the things I probably love most about it are:

- that every bit of it is configurable by code (ehm, by YAML to be fair)

- it’s high level, meaning you don’t have to worry about the internals of the hosts your app will run on

- it’s declarative, meaning you describe the state you want to be in, and it somehow achieves that state, usually quite gracefully (or, if not by default, by offering you a way to configure it to do so).

However, as you would probably expect, k8s does come with a steep learning curve and it does require a fair amount of maintenance. And while it might be a bit much for smaller teams/products, I’ve found that most of the larger companies I’ve worked for are pretty happy with it and would not consider using another technology (especially since it’s possible to use managed k8s clusters to avoid most of the admininstration).

So still, in my book,

Kubernetes solved running your app.

Why I’m doing this

A few things have become increasingly clear to me over the past years and led me to start this project.

To gain exposure / familiarity

Let’s start here: I love these two tools!

Docker is something I work with on a daily basis and I’ve been exposed to it quite a bit. I’ve built some moderately advanced setups with docker/docker-compose and I feel I’m more or less familiar with its fundamentals, best practices and can use it pretty efficiently.

Kubernetes however, not so much. While I do work with it, most apps come with a set of pre-built Helm charts from a much more specialized DevOps / DX / SRE team and I just get to fiddle around with configuring them. While that is more or less the vast majority of what I’m asked to do with them in most situations, I’m not comfortable with having so little & high-level knowledge of the tool used to deploy the apps I’m building.

Hence, I need more meanigful Kubernetes exposure.

AI rendition of Docker and “kninetes” working together

To experiment with more complex architectures

My first challenge as a software engineer was learning to code adequately. Then, building small apps. After that, designing and building small / medium sized services.

Currently I’m exploring how to efficiently design, deploy and scale different services/systems that interact with each other. So, I need an infrastructure to support that and I need it to be an industry standard, something I’ll find out there. Something that can support multiple apps, natively model their interactions, their scaling and their dependencies.

So I need an infra to enable this sort of experimentation.

A “piece code” growing into a “intercarting of ther”. Can’t make this stuff up!

To become more of a T shaped engineer

I’ve spent most of my professional life building Web Applications. More specifically, their backend. Even more specifically, I’ve been building them using Python.

After almost 10 years of working on something so niche, I feel like I’m kind of stagnating. And while there’s always more to learn in the technologies/stack I’ve been using, lately it feels like the return on specializing further is disproportionate to the investment required to do so.

On top of that, I always envisioned myself as a T-shaped engineer. Since I feel like I’ve done a decent amount of deep digging, it’s time to expand my knowledge horizontally. Now, one would think I’d need to explore more frontend technologies, but since I’ve always felt closer to the infra side of things, this feels like an easier step to take.

OK, maybe I’ll become an L-shaped engineer first 😅, but still I need more infrastructure exposure.

An L-shaped Software Engineer. That’s not too bad!

It makes sense to DIY this

Now, a reasonable question would be why not do this with a managed k8s cluster. Most cloud providers offer them, and it would save a reasonable amount of time and effort. And that’s in fact what I did over the past year. I built a managed cluster, and started adding components to it. However, I ran into cost issues.

A very small cluster on DigitalOcean (probably one of the cheapest decent providers out there) with a bare minimum of 2 nodes costs around 36$/month. Add a load balancer for 12$/month, some storage for a few bucks and a managed database for 15$ a month, and you easily get close to 70$ month. That, combined with the fact that I don’t have a dedicated amount of time to spend on the project, but I rather whatever free time I can find here and there on it, increased the cost of having something permanently deployed on a cloud provider significanly. After 1 year of paying for it, I summarized the costs and it was close to what I’d have to spend to build a home lab myself. Plus, building it myself would contribute much more to my goal of improving my infra skills.

This is obviously the dilemma we all face in different aspects of our lives: Buy it or DIY it? But in this case, taking the time to build it myself contributed to a greater goal as well. So it became clear that DIY was the way to go.

A DIY k8s cluster. Don’t ask.

Because it’s fun

Last but not least, I love building things! My favourite toys growing up were LEGOs. I loved the complexity! Some of my happiest childhood memories are when I would get these shipments from my grandparents who lived abroad with LEGOs that were not available in my country. I would spend hours or even days building them, only to never play with them again afterwards because, duh, building them was the most fun part!. I’ve been longing for that feeling ever since, trying to find my “grown up LEGO set”.

25 years later, and being a software engineer, this feels like the closest thing to it.

Huge bonus: I managed to sneak some 3D design/printing to the process, which made it about a million times more fun.

Kids playing with LEGO, adults with software.

So, what are we building?

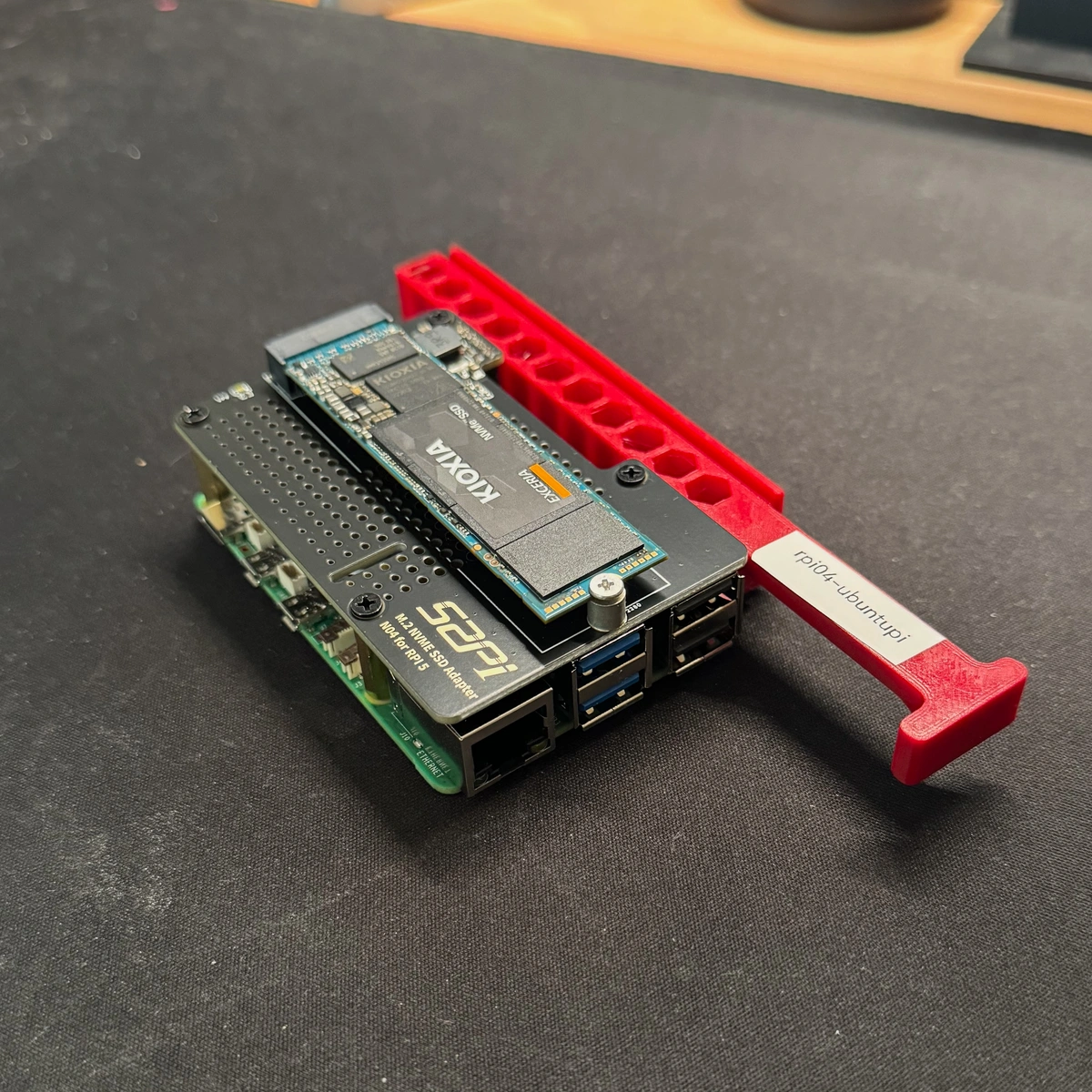

The cluster blinking its lights! Ain’t it pretty?

Right, let’s get to the point!

Here’s what I’m building:

- a k8s cluster on top of 4 (or more) Raspberry Pi 5 nodes, with 8GB of RAM and NVMe storage (via a HAT adapter)

- Ubuntu Server for ARM will be the OS, as it comes with cloud-init and a lot of dependent kernel modules for things like storage etc

- I’ll manage this with either IaC solutions (most likely Pulumi or maybe Terraform) or tools like kubespray (Ansible)

- various components / apps will be deployed with Helm

- I’m aiming to share scripts or parts of my solutions (probably not the whole thing for security reasons)

- I’ll share how I bootstrap the nodes, possibly even some

cloud-initconfiguration to help with that

- I’ll share how I bootstrap the nodes, possibly even some

- Grafana Cloud will be our monitoring tool of choice

- The cluster will include

- load balancer(s) (probably MetalLB), to expose services outside the cluster

- in-cluster certificate signers for HTTPS

- maybe a block storage provider in-cluster (like Longhorn or rook-ceph, still TBD)

- cloud-native database(s) (using solutions like Crunchy-Postgres)

- possibly multiple namespaces, for different apps I’ll be deploying

- a self-hosted container registry, where application containers will be pushed & pulled from

- I’ll also explore security and possibly deploy solutions to enforce it within pods

This is an active project, so things may change. But since it’s been somewhat built already, I am fairly confident about most of the above. Expect changes though, as I build more components and understand the topic model deeply.

Some bonus topics I hope to cover:

- Building the cluster’s rack mount using 3D printing (and designing around our limitations)

- The cost of the entire thing, both building & running, with links to components to help you get started (no affiliate links)

- Network setup, if I manage to buy a proper router (I’ve been eyeing this Mikrotik CCR2004-16G-2S+PC) and build a proper network for my homelab

- Administrative operations, like e.g. adding a new node, shutting down the cluster, backing up a database etc

Lots of stuff, so let’s see where it takes us!

Format

I’ll be sharing separate posts for each thing I’m working on. The format will be something between a journal and a tutorial. This may cause things to get repeated/changed as we go along. I’ll do my best to update older posts if newer ones deprecate them, but most likely things will slip up. I hope to open-source scripts & configuration snippets along the way, but as I said, not everything will be open sourced most likely. I’ll be updating this page with links to individual posts as I go. The timeline I’m aiming for will be 1 post per month, as my time is limited and writing them takes a long time!

Concluding

This has been a long, rather abstract post and thank you for reading this far! While you should probably expect the next ones to be mainly technical, I felt it was important to explain the need for this. Telling people that I planned to run a k8s cluster myself always led to strong “Why?” questions. I believe that explaining my reasons might inspire others to follow this path. I’ll also share the problems I encountered along the way and the effort required to reach each milestone.

If you’re interested in following the process, subscribe to my mailing list to make sure you don’t miss a post. If you’ve already done so, thank you! I’ve never actually sent ut anything, but I hope to start sending out a monthly digest from now on. So now’s a good time to consider it!

See you soon (hopefully) 👋 !